“Even at the state and local level, they have suffered significant setbacks,” Ball stated in an interview with TechCrunch, likely referring to California’s contentious AI safety bill SB 1047.

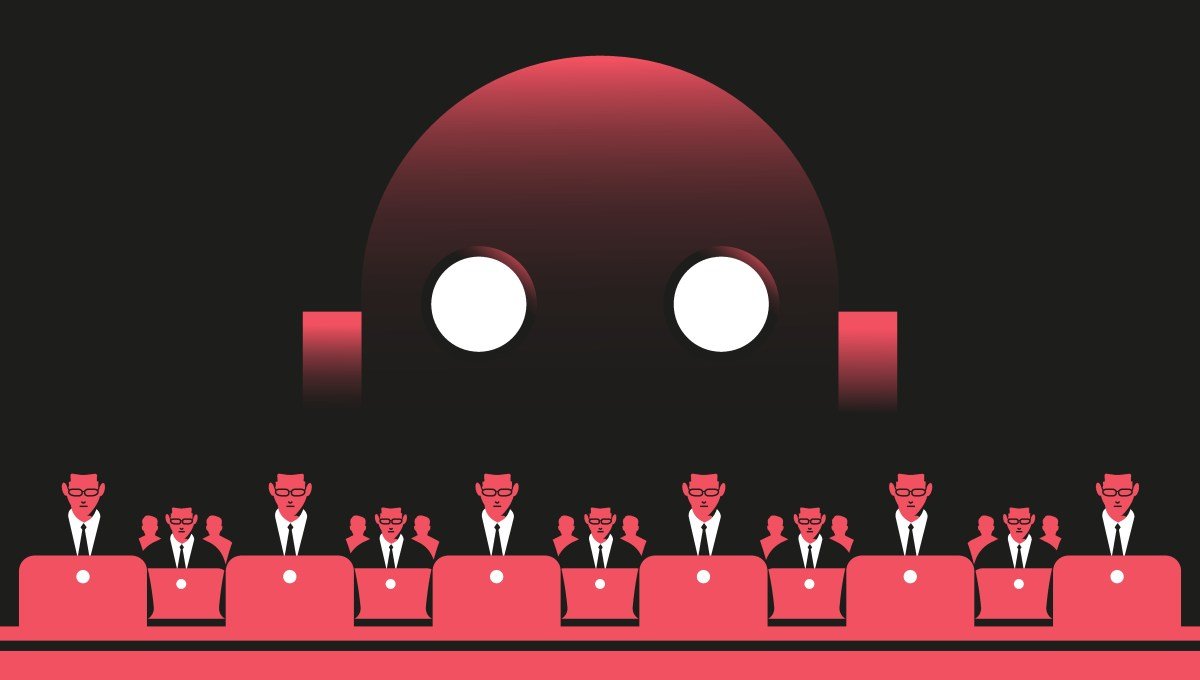

Part of the reason why AI doom fell out of favor in 2024 was simply that as AI models gained in popularity, their limitations and inadequacies became more apparent. For years now, tech experts have been sounding the alarm about the potential dangers of advanced AI systems causing catastrophic harm to humanity.

In 2024, the concerned voices were overshadowed by a more optimistic outlook on generative AI endorsed by the tech industry, a vision that also lined their pockets.

Those who warn of catastrophic AI risks are often labeled as “AI doomers,” a term they do not particularly appreciate. This approach, he argued, would prevent AI from being monopolized by a few powerful entities and enable the United States to effectively compete with China.

While this approach would undoubtedly boost the profits of a16z’s numerous AI startups, some found his tech-optimism tactless in an era marked by significant income disparities, global health crises, and housing challenges.

While Andreessen does not always see eye to eye with Big Tech, profit-making unites the entire industry. He contended that Big Tech companies and startups should be permitted to develop AI rapidly and aggressively, with minimal regulatory hindrances. This year, a16z’s co-founders collaborated with Microsoft CEO Satya Nadella in penning a letter urging the government to refrain from regulating the AI industry.

Despite the frantic warnings issued in 2023, figures like Musk and other technologists did not slow down to focus on safety in 2024; on the contrary, AI investments in 2024 surpassed previous records. In November 2023, the nonprofit board of OpenAI, the world’s premier AI developer, dismissed Sam Altman, citing concerns about his credibility and trustworthiness to oversee such a critical technology as artificial general intelligence (AGI) – a concept once seen as the ultimate goal of AI, denoting systems that possess self-awareness (even though the definition is evolving to align with the commercial interests of those discussing it).

For a moment, it seemed like the ambitions of Silicon Valley entrepreneurs would be tempered by considerations for the broader societal well-being.

However, to these entrepreneurs, the narrative surrounding AI doom was more disconcerting than the AI models themselves.

In response, Marc Andreessen, co-founder of a16z, penned a 7,000-word essay in June 2023 titled “Why AI will save the world,” dismantling the concerns of AI doomers and presenting a more hopeful perspective on the technology’s future.

In his closing remarks, Andreessen proposed a convenient solution to assuage our AI anxieties: move swiftly and disrupt existing norms – essentially advocating for the same ethos that has characterized the adoption of every 21st-century technology (along with its attendant challenges). Andreessen revealed that he has been advising Trump on matters related to AI and technology in recent months, with Sriram Krishnan, a seasoned venture capitalist at a16z, now serving as Trump’s official senior AI adviser.

Republicans in Washington have several priorities concerning AI that are now deemed more pressing than AI doom, according to Dean Ball, an AI-focused research fellow at George Mason University’s Mercatus Center. It becomes challenging to envision Google Gemini evolving into Skynet when it can’t even distinguish between pizza toppings.

Nevertheless, 2024 also witnessed AI products that seemed to bring science fiction concepts to life. While catastrophic AI risks draw inspiration largely from sci-fi films, the AI era is unveiling that some sci-fi ideas may not remain fictional forever. For example, OpenAI demonstrated how we could interact with our phones without speaking, and Meta unveiled smart glasses with real-time visual recognition. Discussions on AI doom and AI safety – a broader topic covering concerns like algorithmic biases, insufficient content moderation, and other ways AI could harm society – transitioned from niche debates in San Francisco cafes to mainstream conversations on major news networks and publications like MSNBC, CNN, and The New York Times.

To summarize the alarms raised in 2023: Elon Musk, along with over a thousand technologists and scientists, called for a halt in AI development, urging the world to prepare for the profound risks associated with the technology. These include expanding data centers to power AI, leveraging AI in government and military operations, competing with China, regulating content moderation by center-left tech companies, and safeguarding children from AI chatbots.

“At the federal level, I believe the movement to mitigate catastrophic AI risk has lost momentum.” Subsequently, President Biden issued an executive order concerning AI with the overarching goal of safeguarding Americans from AI systems. Soon after, leading scientists from OpenAI, Google, and other labs signed an open letter stressing the importance of considering the risk of AI leading to human extinction. Altman swiftly resumed his position at the helm of OpenAI, although a group of safety researchers departed the organization in 2024, citing concerns about its diminishing safety culture.

Biden’s safety-oriented AI executive order has waned in popularity this year in Washington, D.C., with the incoming President-elect, Donald Trump, announcing intentions to revoke Biden’s order, arguing that it impedes AI innovation. They fear that AI systems may make lethal decisions, be exploited by the powerful to subjugate the masses, or contribute to the downfall of societies in various ways.

In 2023, it seemed like we were entering a renaissance period for technology regulations.